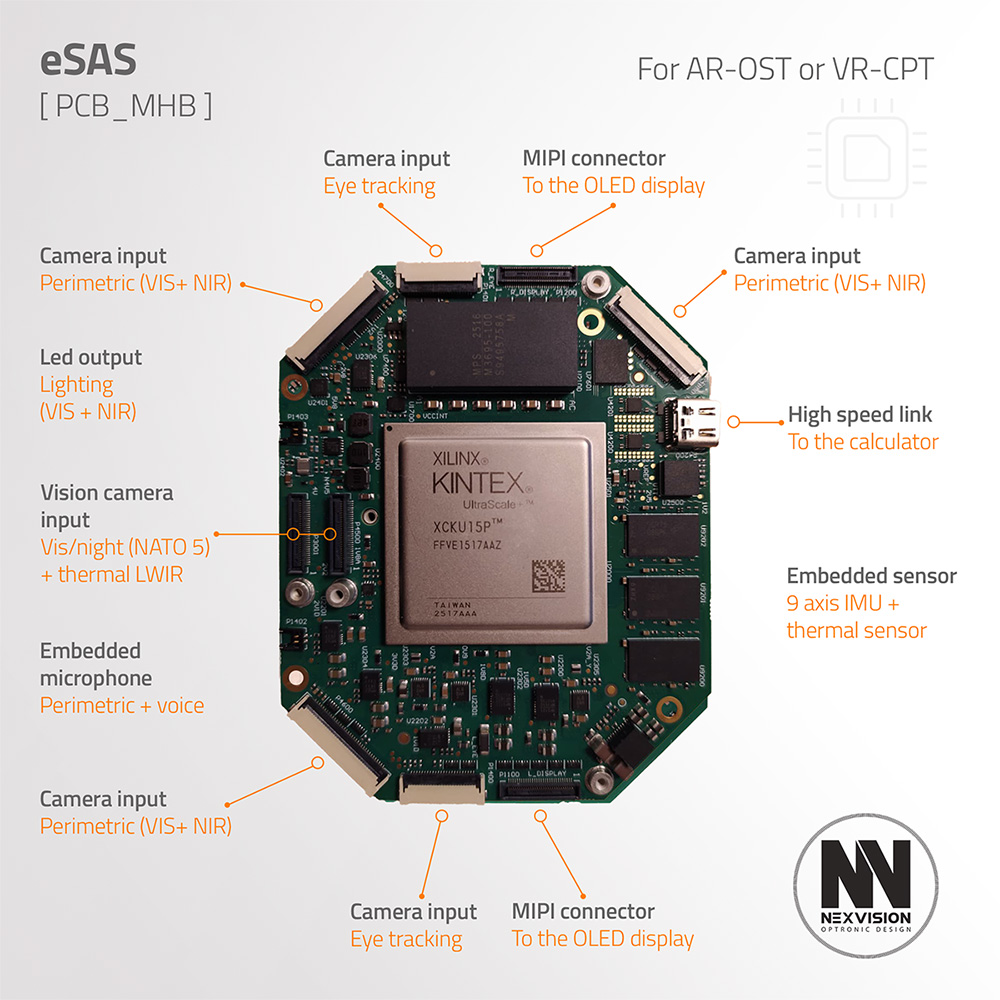

Daylight, night vision, thermal imaging, inertial data… In next-generation combat helmets, every sensor must operate in perfect synchronization. All data streams must be corrected, aligned, and fused within milliseconds — without adding weight or compromising soldier ergonomics. This is exactly the challenge addressed by the PCB-MHB board, one of the core technological building blocks of NEXVISION’s eSAS program.

An embedded board at the heart of the AR-OST helmet

Embedded directly inside the AR-OST (Augmented Reality – Optical See-Through) helmet, the PCB-MHB plays a critical orchestration role. It manages the day and low-light, night-vision, and thermal imaging channels, the perimeter video cameras, and the 9-axis inertial and temperature sensors. Handling such a diverse set of inputs demands precise synchronization and very low latency.

FPGA-based real-time processing: every millisecond matters

At the heart of the board lies a high-capacity FPGA, capable of ingesting and synchronizing all sensor streams with minimal latency. It also hosts a real-time processing pipeline dedicated to distortion correction and precise alignment between the real world and augmented overlays — a fundamental requirement for Optical See-Through AR systems.
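To make the idea of distortion correction concrete, here is a minimal Python sketch of a generic two-coefficient radial lens model and its inverse. It is an illustration only: the article does not say which model the PCB-MHB pipeline uses. The function names `distort` and `undistort` and the coefficients `k1` and `k2` are assumptions chosen for the example, and an FPGA implementation would more plausibly use fixed-point arithmetic or precomputed lookup tables than floating-point iteration.

```python
from typing import Tuple


def distort(x: float, y: float, k1: float, k2: float) -> Tuple[float, float]:
    """Apply a radial distortion model to normalized image coordinates.

    k1 and k2 are hypothetical radial coefficients, not values from the article.
    """
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(
    xd: float, yd: float, k1: float, k2: float, iterations: int = 20
) -> Tuple[float, float]:
    """Invert the radial model by fixed-point iteration.

    Starts from the distorted point and repeatedly divides by the scale
    factor evaluated at the current estimate. This converges for mild
    distortion; strong distortion may need a different solver.
    """
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


if __name__ == "__main__":
    k1, k2 = -0.2, 0.05  # illustrative coefficients only
    xd, yd = distort(0.3, -0.4, k1, k2)
    x, y = undistort(xd, yd, k1, k2)
    print(f"distorted=({xd:.6f}, {yd:.6f}) recovered=({x:.6f}, {y:.6f})")
```

Aligning an overlay with the real world then amounts to applying such a correction per sensor before mapping every stream into a common reference frame, which is why the correction has to run inside the low-latency pipeline rather than as a later post-processing step.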

Connected to the VPU (the soldier-worn embedded computing unit), the PCB-MHB supports advanced image analysis and mission-level instructions. At NEXVISION, every millisecond matters — and each building block brings eSAS closer to operational readiness.