What is an image sensor?

What is it for?

An image sensor is an electronic component designed to capture images and convert them into digital data.

How does it work?

To understand how an image sensor works, you first need to understand two things:

1. Light is made up of photons, i.e. small packets of energy emitted by a light source (e.g. the sun, a light bulb, etc.).

2. Photons can be "converted" into electrons: this is called the photoelectric effect. In physics, the photoelectric effect refers to the emission of electrons by a material, usually a metal, when it is exposed to light or other electromagnetic radiation whose frequency is high enough; the minimum frequency required depends on the material.
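To make the "high enough frequency" condition concrete, the Python sketch below computes the energy of a photon from its wavelength, E = h·c / λ. One assumption to flag: in a silicon photodiode the relevant threshold is not a metal's work function but silicon's band gap (taken here as roughly 1.12 eV at room temperature), because the electron is freed inside the material rather than emitted from it. Under that assumption, photons with a wavelength longer than about 1.1 µm do not carry enough energy, which is one reason bands such as SWIR generally call for sensors made of other materials.

```python
# Physical constants (SI units)
H = 6.62607015e-34      # Planck constant, J·s
C = 2.99792458e8        # speed of light, m/s
Q_E = 1.602176634e-19   # elementary charge, C (1 eV = Q_E joules)

# Assumption: approximate band gap of silicon at room temperature
SILICON_BAND_GAP_EV = 1.12


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of one photon, E = h*c / wavelength, expressed in electronvolts."""
    return H * C / (wavelength_nm * 1e-9) / Q_E


def cutoff_wavelength_nm(threshold_ev: float) -> float:
    """Longest wavelength whose photons still carry at least threshold_ev."""
    return H * C / (threshold_ev * Q_E) * 1e9


for name, wl in [("blue", 450), ("green", 550), ("red", 650), ("SWIR", 1550)]:
    e = photon_energy_ev(wl)
    print(f"{name:5s} {wl:5d} nm  {e:.2f} eV  enough for silicon: {e >= SILICON_BAND_GAP_EV}")

print(f"silicon cutoff: about {cutoff_wavelength_nm(SILICON_BAND_GAP_EV):.0f} nm")
```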

More specifically, an image sensor transforms light energy into electrical energy. It is composed of photosensitive cells, called photodiodes.

If a photon carrying enough energy "hits" a photodiode, it releases an electron. The electrons collected in this way produce an analog electrical signal, which an analog-to-digital converter (ADC) then digitizes.
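The chain described above (photon, electron, analog signal, digital value) can be sketched for a single pixel as follows. Every default value (quantum efficiency, full-well capacity, conversion gain, ADC reference voltage and bit depth) is a hypothetical figure chosen for illustration, not the specification of any real sensor.

```python
def pixel_to_digital(photons: int,
                     quantum_efficiency: float = 0.6,  # hypothetical: 60 % of photons free an electron
                     full_well: int = 20_000,          # hypothetical: max electrons a photodiode holds
                     uv_per_electron: float = 50.0,    # hypothetical conversion gain, µV per electron
                     v_ref: float = 1.0,               # hypothetical ADC reference voltage, in volts
                     bits: int = 10) -> int:
    """Sketch of one pixel: photons -> electrons -> analog voltage -> digital code."""
    # Photoelectric conversion: only a fraction of the photons release an electron,
    # and the photodiode saturates once its full-well capacity is reached.
    electrons = min(round(photons * quantum_efficiency), full_well)
    # The collected charge is read out as an analog voltage.
    voltage = electrons * uv_per_electron * 1e-6
    # Analog-to-digital conversion: map [0, v_ref] onto 2**bits levels.
    levels = 2 ** bits
    code = int(voltage / v_ref * levels)
    return min(code, levels - 1)  # clip to the largest representable code


for photons in (0, 10_000, 1_000_000):
    print(photons, "photons ->", pixel_to_digital(photons))
```

With these defaults, 10,000 photons give 6,000 electrons, about 0.3 V and the 10-bit code 307, while a very bright pixel saturates at the maximum code 1023.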

These photodiodes, or photosites, are arranged on the surface of the sensor so that they form a matrix of pixels. The size of this matrix gives the definition of the sensor (for example, a Full HD sensor is 1920 pixels wide x 1080 pixels high).
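The link between the pixel matrix and the sensor's definition is simple arithmetic. The sketch below counts the photosites of the 1920 x 1080 example and, assuming a hypothetical pixel pitch of 3 µm, estimates the size of the active area; the 3 µm figure is only an illustration.

```python
def pixel_count(width_px: int, height_px: int) -> int:
    """Number of photosites in a width x height pixel matrix."""
    return width_px * height_px


def active_area_mm(width_px: int, height_px: int, pitch_um: float) -> tuple[float, float]:
    """Approximate width and height of the pixel matrix, in millimetres."""
    return width_px * pitch_um / 1000, height_px * pitch_um / 1000


n = pixel_count(1920, 1080)
print(f"{n} pixels ({n / 1e6:.1f} megapixels)")  # prints: 2073600 pixels (2.1 megapixels)

w, h = active_area_mm(1920, 1080, pitch_um=3.0)  # 3 µm pitch is a hypothetical value
print(f"active area: {w:.2f} mm x {h:.2f} mm")   # prints: active area: 5.76 mm x 3.24 mm
```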

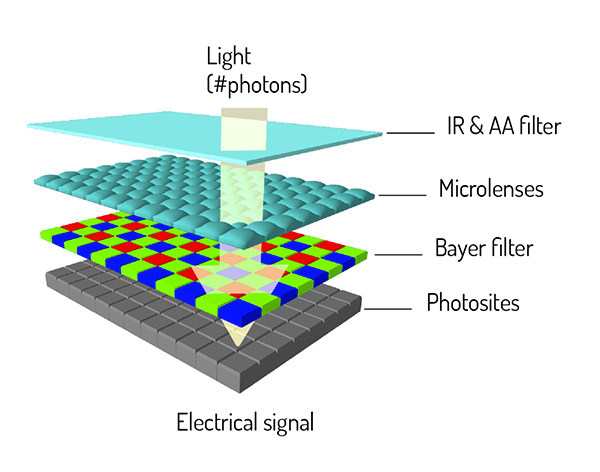

In addition, the sensor is fitted with a filter (often an infrared filter), microlenses (which channel the light onto the photosites) and a Bayer filter (a mosaic of red, green and blue filters placed over the photosites, from which the color of each pixel can be reconstructed).
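Because each photosite under a Bayer filter records only one color, the full-color image has to be rebuilt afterwards, a step known as demosaicing. The sketch below assumes the common RGGB layout and uses the simplest possible method, merging each 2 x 2 block into one RGB pixel at half resolution; real camera pipelines typically interpolate the missing colors at full resolution with more elaborate algorithms.

```python
def bayer_color(row: int, col: int) -> str:
    """Color filter over photosite (row, col) in an RGGB Bayer pattern (assumed layout)."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(rgb):
    """Simulate the sensor: each photosite keeps only the channel of its own filter."""
    channel = {"R": 0, "G": 1, "B": 2}
    return [[pixel[channel[bayer_color(r, c)]] for c, pixel in enumerate(row)]
            for r, row in enumerate(rgb)]


def demosaic_superpixel(raw):
    """Merge each 2x2 RGGB block into one RGB pixel (half resolution in each direction)."""
    out = []
    for r in range(0, len(raw) - 1, 2):
        out_row = []
        for c in range(0, len(raw[0]) - 1, 2):
            red = raw[r][c]
            green = (raw[r][c + 1] + raw[r + 1][c]) / 2  # average the two green sites
            blue = raw[r + 1][c + 1]
            out_row.append((red, green, blue))
        out.append(out_row)
    return out


image = [[(200, 100, 50)] * 4 for _ in range(4)]  # uniform orange 4 x 4 image
raw = mosaic(image)                               # what the sensor actually records
print(raw[0][:2], raw[1][:2])                     # prints: [200, 100] [100, 50]
print(demosaic_superpixel(raw))                   # 2 x 2 grid of (200, 100.0, 50)
```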

The main characteristics of an image sensor are:

> Frequency band: UV / Visible / Night / VNIR / NIR / SWIR / MWIR / Thermal LWIR / THz

> Manufacturing technology: CCD / CMOS / EBCMOS

> Physical dimensions: length x width

> Resolution: image size in pixels (width x height) and pixel size

> Sensitivity: ability to capture good-quality images across different light levels

> fps (frames per second): the number of frames per second that the sensor is able to capture

> Type: rolling shutter / global shutter, i.e. the way the photosensitive cells are exposed [rolling shutter: the lines are exposed and read out one after the other, so there is a time offset between lines; it saves an output transistor and a capacitor in each pixel, which makes it much cheaper and easier to manufacture / global shutter: all pixels are exposed at the same moment, then read out]
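The time offset of a rolling shutter can be estimated with a few lines of arithmetic. The sketch assumes each row starts its exposure one fixed "line time" after the previous row; the figures in the example (1080 rows, a 30 µs line time, an object moving at 1000 pixels per second) are hypothetical. Under these assumptions, a moving vertical edge comes out slanted with a rolling shutter and straight with a global shutter.

```python
def row_exposure_start(row: int, line_time_s: float, global_shutter: bool) -> float:
    """Time at which a given row starts its exposure, relative to the first row."""
    # Global shutter: every row starts at the same moment.
    # Rolling shutter: each row starts one line time after the previous one.
    return 0.0 if global_shutter else row * line_time_s


def skew_pixels(rows: int, line_time_s: float, speed_px_per_s: float,
                global_shutter: bool = False) -> float:
    """Horizontal shift of a moving object between the top and bottom rows."""
    delay = row_exposure_start(rows - 1, line_time_s, global_shutter)
    return speed_px_per_s * delay


# Hypothetical figures: 1080 rows, 30 µs line time, object moving at 1000 px/s
print(f"rolling shutter skew: {skew_pixels(1080, 30e-6, 1000):.1f} px")
print(f"global shutter skew:  {skew_pixels(1080, 30e-6, 1000, global_shutter=True):.1f} px")
```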

A video is in fact a succession of still images. Played one after the other, they give the impression of a moving scene.

About 25 frames per second are needed for humans to perceive a video as fairly fluid; anything below that looks jerky. Capturing more than 25 frames per second is very useful for slow motion. Remember those ads where a drop of water falls gently and then bursts into a playful multitude of tiny droplets? This is possible because many images are captured in a very short space of time and then played back at normal speed.
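The slow-motion effect follows from the ratio between the capture frame rate and the playback frame rate. In this sketch the playback rate is assumed to be 25 frames per second, as above, while the 1000 fps capture rate and the 0.1 s splash are hypothetical figures.

```python
def slow_motion_factor(capture_fps: float, playback_fps: float = 25.0) -> float:
    """How many times slower than real life the footage appears."""
    return capture_fps / playback_fps


def screen_duration_s(real_duration_s: float, capture_fps: float,
                      playback_fps: float = 25.0) -> float:
    """On-screen duration of an event filmed at capture_fps and shown at playback_fps."""
    frames = real_duration_s * capture_fps  # number of images recorded
    return frames / playback_fps


capture_fps = 1000  # hypothetical high-speed camera
print(f"slow-motion factor: {slow_motion_factor(capture_fps):.0f}x")
print(f"a 0.1 s splash lasts {screen_duration_s(0.1, capture_fps):.1f} s on screen")
```

With these figures, the splash is recorded as 100 frames and lasts 4 s on screen, i.e. 40 times slower than in reality.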

In other words, if you need to film a scene in which objects move very fast (e.g. Formula 1) and want to see, for example, exactly which of two cars crosses the finish line first (when they cross it almost at the same time), you will need an image sensor capable of capturing a very large number of frames per second (for example, 60 to 100 frames per second), so that you can step through the images frame by frame and get an accurate result.
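A quick way to see why a higher frame rate helps is to compute how far a car moves between two consecutive frames. The 300 km/h speed below is an assumed, illustrative figure.

```python
def distance_between_frames_m(speed_kmh: float, fps: float) -> float:
    """Distance an object travels between two consecutive frames, in metres."""
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return speed_ms / fps       # metres covered during one frame interval


for fps in (25, 60, 100, 1000):
    print(f"{fps:5d} fps: {distance_between_frames_m(300, fps):.2f} m between frames")
```

At 100 fps the car still moves about 0.83 m between frames, so two cars closer than that may cross the line between the same pair of frames; a higher frame rate narrows that gap.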

Once the light is transformed into electrical energy, it is necessary to be able to:

Then, be able to either:

Reconstruct images as faithfully as possible, whatever the environmental conditions may be.

The blue section of the opposite table shows a whole range of algorithms for reconstructing images from the data captured by the image sensor.

In order to do this, very complex operations must be performed in real time, so as to deal with:

Or extract information from the observed scene in order to make decisions and trigger different actions.

The yellow part of the opposite table shows different algorithms that analyze an image's pixels in order to extract information.
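As a minimal, hypothetical example of analyzing pixels to extract information and then trigger an action, the sketch below detects motion by comparing two consecutive grey-level frames. The technique shown (simple frame differencing) and its thresholds are assumptions made for illustration; they are not taken from the table.

```python
def changed_pixels(prev, curr, threshold: int = 30) -> int:
    """Count pixels whose grey level changed by more than `threshold` (hypothetical value)."""
    return sum(
        1
        for row_prev, row_curr in zip(prev, curr)
        for a, b in zip(row_prev, row_curr)
        if abs(a - b) > threshold
    )


def motion_detected(prev, curr, threshold: int = 30, min_fraction: float = 0.01) -> bool:
    """Decide whether a large enough share of the image changed to count as motion."""
    total = len(curr) * len(curr[0])
    return changed_pixels(prev, curr, threshold) / total >= min_fraction


# Two tiny 4x4 grey-level frames: a bright object appears in one corner
frame_a = [[10] * 4 for _ in range(4)]
frame_b = [row[:] for row in frame_a]
frame_b[0][0] = 200

if motion_detected(frame_a, frame_b):
    print("motion detected -> trigger an action (e.g. raise an alarm)")
```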

This can involve algorithms:

As you can see, a vision system is not just a simple camcorder used to film a vacation: it is a powerful system made up not only of an image capture system working in different frequency bands, but also of an analytical intelligence whose applications are broad and span many fields. It also extends human capabilities, since it can see what we normally cannot and operate at very high frame rates, which we are also unable to do.